Setting the M&E action objectives; Determine what information the evaluation must provide

How do we Evaluate Programmes?

An organization aiming at programme quality should establish a system of Monitoring and Evaluation. To ensure programme quality, programme managers need to use the feedback from monitoring and evaluation in order to:

check whether the programme or project is being implemented according to plan, and assess whether the programme or project is resulting in the anticipated changes or impacts (thereby fulfilling the basic requirement for a projectized organization);

identify key learning points to feed back into improved programme design and management (thereby fulfilling the basic requirement for a learning organization);

identify the need and the scope to raise the capacity of the organization's human resources to manage their tasks successfully and to contribute to a healthy communication climate within the organization and with external stakeholders (thereby fulfilling the basic requirement for an employee-empowering organization);

build professional reputation and standards in organizational activities and image, so that there is transparency and accountability in all aspects of organizational culture and managerial style.

When setting the objectives, one should clearly consider how the evaluation reports will be used within programme cycle management and to meet the requirements of organizational development.

| Evaluation Constraints |

Every program planner faces limitations when conducting an outcome evaluation. You may need to adjust your evaluation to accommodate constraints such as the following:

These constraints may make the ideal evaluation impossible. If you must compromise your evaluation’s design, data collection, or analysis to fit limitations, decide whether the compromises will make the evaluation results invalid. If your program faces severe constraints, do a small-scale evaluation well rather than a large-scale evaluation poorly. Realize that it is not sensible to conduct an evaluation if it is not powerful enough to detect a statistically significant change. |
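The point above about statistical power can be checked before committing resources. What follows is a minimal sketch, not part of the original guidance: it assumes the outcome is a proportion (for example, the share of respondents reporting a behaviour) compared between two independent, equally sized groups with a two-sided z-test, and it relies on the standard normal approximation. The function name `approx_power` and the example figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(p_before, p_after, n_per_group, alpha=0.05):
    """Approximate power of a two-sided z-test comparing two proportions.

    Assumes two independent groups of equal size and a normal approximation;
    the small probability of rejecting in the wrong direction is ignored.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    se = sqrt(p_before * (1 - p_before) / n_per_group
              + p_after * (1 - p_after) / n_per_group)
    return std_normal.cdf(abs(p_after - p_before) / se - z_crit)


# Hypothetical figures: an expected rise from 30% to 40% of respondents.
for n in (50, 400):
    print(n, round(approx_power(0.30, 0.40, n), 2))
```

With these illustrative inputs, 50 respondents per group give well under an even chance of detecting the change, while 400 per group give a power above 0.8. A check of this kind helps decide whether a small-scale evaluation is still worth doing or whether the design needs to change.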

What's the problem?

What needs to change to solve it?

How can we bring about those changes?

How will we know that it is working?

Are we doing the right things? (strategy level)

is the justification/rationale of the project coherent?

is the logical model coherent?

Are we doing things right? (Operation level)

Did we do what we said we'd do?

Did it bring about the changes we were seeking? (How? Why/why not?)

Should we be doing it differently?

what was the effectiveness in achieving expected outcomes?

what was the efficiency in optimizing resources?

what was the level of beneficiary satisfaction?

Did the changes solve the problem?

How do we know?

What is the evidence?

Can we do things better? (Learning level)

which are the better alternatives?

which are the best practices in this field?

what are the lessons learnt?

Questions to Ask of Intended Users to Establish an Evaluation’s Intended Influence on Decisions

What decision, if any, is the evaluation finding expected to influence?

When will decisions be made? By whom? When, then, must the evaluation findings be presented to be timely and influential?

What is at stake in the decisions? For whom? What controversies or issues surround the decision?

What’s the history and context of the decision-making process?

What other factors (values, politics, personalities, promises already made) will affect the decision-making? What might happen to make the decision irrelevant or keep it from being made? In other words, how volatile is the decision-making environment?

How much influence do you expect the evaluation to have – realistically?

To what extent has the outcome of the decision already been determined?

What data and findings are needed to support decision making?

What needs to be done to achieve that level of influence?

Who needs to be involved for the evaluation to have that level of influence?

How will we know afterward if the evaluation was used as intended?

----

In fact, I would say we evaluate in order to:

• check whether the programme or project is being implemented according to plan;

• assess whether the programme or project is resulting in the changes or impacts we anticipated;

• consider how sustainable the programme or project impact is likely to be;

• consider how successfully the programme or project is addressing or having an impact on gender issues;

• identify key learning and action points to feed back into this programme or project and inform future projects, programmes and policy.

Identifying the domains of change to be monitored involves selected stakeholders identifying broad domains—for example, ‘changes in people’s lives’—that are not precisely defined like performance indicators, but are deliberately left loose, to be defined by the actual users.

How to determine the intended use(s) of the evaluation?

Determining the intended use(s) of an evaluation should involve a negotiation between the evaluator/evaluation team (the person or team who conducts the evaluation process and has responsibility for facilitating use) and the primary intended user(s). In most cases, the evaluation will have multiple uses. By involving all the primary intended users in the process of determining the type of evaluation that is needed, the various perspectives are better represented and users can establish consensus about the primary intended use(s).

1. Making judgments of merit or worth

2. Facilitating improvements

3. Generating knowledge (Patton, 76)

Facilitation Questions

• How could the evaluation contribute to program/project improvement?

• How could the evaluation contribute to making decisions about the project/program?

• What outcomes do you expect from the evaluation process?

• What do you expect to do differently because of this evaluation?


Table 3.1: Checklist of Stakeholder Roles

| Individuals, groups, or agencies | To make policy | To make operational decisions | To provide input to evaluation | To react | For interest only |
| --- | --- | --- | --- | --- | --- |
| Developer of the program | | | | | |
| Funder of the program | | | | | |
| Person/agency who identified the local need | | | | | |
| Boards/agencies who approved delivery of the program at local level | | | | | |
| Local funder | | | | | |
| Other providers of resources (facilities, supplies, in-kind contributions) | | | | | |
| Top managers of agencies delivering the program | | | | | |
| Program managers | | | | | |
| Program directors | | | | | |
| Sponsor of the evaluation | | | | | |
| Direct clients of the program | | | | | |
| Indirect beneficiaries of the program (parents, children, spouses, employers) | | | | | |
| Potential adopters of the program | | | | | |
| Groups excluded from the program | | | | | |
| Groups perceiving negative side effects of the program or the evaluation | | | | | |
| Groups losing power as a result of use of the program | | | | | |
| Groups suffering from lost opportunities as a result of the program | | | | | |
| Public/community members | | | | | |
| Others | | | | | |

Involving Stakeholders

The first challenge will be to identify the stakeholders. This can be done by looking at documents about the intervention and talking with program staff, local officials, and program participants. Stakeholders can be interviewed initially, or brought together in small groups.

Stakeholder meetings can be held periodically, or a more formal structure can be established. The evaluation manager may set up an advisory or steering committee structure. Tasks can be assigned to individuals or to smaller sub-committees if necessary.

Often there is one key client sponsoring or requesting the evaluation. The needs of this client will largely shape the evaluation. The evaluator, who listens and facilitates discussions about the evaluation’s focus, can summarize, prepare written notes, and provide key stakeholders with options about ways the evaluation can be approached.

By engaging the stakeholders early on, everyone will have a better understanding of the intervention and the challenges it faces in implementation. In addition, the evaluation team will be better informed as to key issues for the evaluation and about what information is needed, when, and by whom. Meeting with key stakeholders helps ensure that the evaluation will not miss major critical issues. It also helps get a "buy in" on the evaluation as stakeholders perceive the evaluation as potentially helpful in attempting to answer their questions.

The extent to which stakeholders are actively involved in the design and implementation of the evaluation depends on several factors. For example, stakeholders may not be able to afford to take time away from their regular duties, or there may be political reasons why the evaluation needs to be seen as independent.

While it may be somewhat unwieldy, involvement of stakeholders in this first step is likely to:

A plan:

§ Identifies the PIP it is part of, and explains how it contributes to the achievement of the PIP’s objectives,

§ Identifies details relevant to the project for each part of a PIP: partners, budgets, inputs, outputs and deliverables,

§ Identifies Who will do What by When for what Cost (cost = spend and people),

§ Contains milestones, showing when key events and outputs will be completed and who is responsible for this, so that progress and performance against the plan can be tracked,

§ Has a clearly identified funding source(s) (either restricted or unrestricted),

§ Has a budget attached (or a one line budget & payments schedule if a grant to a partner – the detailed budget and partner proposal must be attached as an annex),

§ Has an appraisal of the project and implementing organisation(s) attached and states the actions that will be taken to overcome any areas of concern and risks, (See Section 2.8)

§ May be written in English, French, Spanish, or Portuguese if the Regional Director (RD) or Regional Programme Manager (RPM) permits it (except for title and short description which are always written in English).

§ Rapid Onset Projects may be developed to cover the start-up phase of emergency situations

There are no minimum time periods or budgets for Projects.

More than one partner is allowed per project (although it is recommended that authors create separate project plans for each partner). If the work of several partners is combined in one budget and project plan, then the Full Description and the ‘Milestones’ section need to be clear about which partner is responsible for delivering each part of the plan and who in Oxfam will be accountable for this.

Other resources:

IDRC: Identifying the Intended Use(s) of an Evaluation

IPDET: Descriptive, Normative, and Cause-Effect Evaluation Designs

----------

Relationship between Program Stages and the Broad Evaluation Question

The first dimension to cover in front-end planning is the relationship between program stages and the broad evaluation question that will be asked. The life of a policy or program can be thought of as something of a developmental progression in which different evaluation questions are at issue at different stages. Pancer & Westhues have presented a typology as shown in Table 3.3.

Table 3.3: Typology of the Life of a Policy or Program

| Stage of program development | Evaluation question to be asked |
| --- | --- |
| Assessment of social problem and needs | To what extent are community needs and standards met? |
| Determination of goals | What must be done to meet those needs and standards? |
| Design of program alternatives | What services could be used to produce the desired changes? |
| Selection of alternative | Which of the possible program approaches is most robust? |
| Program implementation | How should the program be put into operation? |
| Program operation | Is the program operating as planned? |
| Program outcomes/effects/impact | Is the program having the desired effects? |
| Program efficiency | Are program effects attained at a reasonable cost? |

Monitoring is about gathering information that will help us answer important questions about the effectiveness of our programme or project. It is a tool which supports:

· Learning, understanding and motivating. Those involved in monitoring processes learn what is happening at the time and are able to consider the effects; the organisations learn from the individuals’ experiences and future projects benefit.

· Managing the project: reviewing progress and making decisions about changes. Monitoring allows us to follow progress against the programme or project plan, helping us to see whether activities are taking place as planned, and whether they are resulting in the changes that we thought they would. It allows us to check whether the assumptions we have made about how change happens are correct, and to manage risks so that we can maximise the impact we are having, or detect problems or developments we had not anticipated as they arise and make informed decisions on how to adjust resources, activities or perhaps the objectives.

· Being accountable. It helps the beneficiaries and the donors understand how resources are used, what problems are being faced and whether the objectives are being met.

In addition, monitoring provides an opportunity to discuss the problems our partners are facing, and to support them in identifying the causes of these problems and solving them themselves. It can therefore be an important part of ongoing capacity building processes.

Well planned, implemented and documented monitoring will then provide us with a good basis for more in-depth reviews of longer-term and sustained impact, helping us to learn from our experience and build it into future plans and programmes.

Ideally, we should be monitoring the following broad areas; each focuses on different aspects of the project, its management and its wider context:

· progress in implementing activities;

· changes occurring where the project might have had an influence:

o changes in relation to the hoped-for outcomes and impact of the project;

o changes related to risks identified of negative effects;

o unanticipated changes, positive or negative, in the lives of the intended beneficiaries or others;

· checking any assumptions on which the project design was based in order to consider adjustments that need to be made if the assumptions do not hold true;

· changes in the operating context;

o checking for unexpected factors that are influencing the project’s progress, positively or negatively;

o monitoring the changing situation in emergencies.

· organisational capacities of the agencies involved;

· resources - human, material and financial;

· how the project relates to other projects or wider programmes (of the counterpart and/or Oxfam).

Monitoring should be a collaborative process between Oxfam staff, partners and the communities we work with, seeking to solve problems and adapt projects as necessary to maximise the impact that can be achieved.

As a guiding principle, those who will benefit from and use the information should be involved in designing and implementing the monitoring process.

When thinking about what the roles of people involved in the monitoring process will be:

· consider how to involve women and men beneficiaries;

· decide who will be responsible for collecting information, for analysing it, for presenting it and for taking decisions about changes

Monitoring is most effective when it is a continuous process that is built into programme or project design - taking place from the beginning of implementation until, and sometimes beyond, the end of the project. The development of a detailed monitoring process is generally done once the project or programme has been agreed (immediately before or after formal approval, according to the circumstances) - but before activities start.

When developing the monitoring process and plan, it is important to:

· distinguish between our partners' monitoring needs and the monitoring needs for Oxfam’s contribution;

· establish a shared understanding of the purpose of monitoring among the different participants and stakeholders. This will help to motivate those involved and ensure that participants and stakeholders are able to provide relevant, timely information;

· think through how project monitoring will contribute to monitoring the progress of wider programmes - and how the information may need to be combined or supplemented with other sources of information or information from other projects or activities

A variety of approaches can be used for collecting monitoring information and learning about a project or programme. These do not always need to be heavy, time-consuming or costly processes. Some examples include:

· discussions, informal observations and conversations;

· use of Participatory Learning and Action (PLA) approaches and other similar approaches that focus on the full participation of beneficiaries and other stakeholders in the processes of learning about their needs and opportunities, and in the action required to address them (e.g. mapping, matrices etc.);

· video/cameras, role-plays, drawings;

· written records: record keeping (fact sheets, stock cards etc.), diaries, case studies; surveys with questionnaires or checklists;

· data collected independently by local authorities or other agencies may also be relevant.

A combination of approaches, and a good balance between quantitative information (numbers, statistics demonstrating impact) and qualitative information (people describing impact) will enhance the data available for us to make judgements about the programme or project.

It is not enough to monitor whether projects/activities are being carried out as planned - we need to also ask ourselves, 'are they working?'. Evaluation is the process of using the information we collect to make judgements about our programme or project. 'Evaluation' refers to both an ongoing activity, closely linked with our monitoring process, and to more formal assessments of the performance of a programme or project against its stated objectives at an agreed point in time. We should be doing both - continually thinking about what the information we gather is telling us, as well as conducting more formal assessments at set points in time.

Evaluating our programmes and projects allows us to:

· check whether the programme or project is being implemented according to plan

· assess whether the programme or project is resulting in the changes we anticipated

· consider how sustainable the programme or project impact is likely to be,

· consider how successfully the programme or project is addressing gender issues

· identify key learning and action points to feed back into this programme or project and inform future projects, programmes and policy.

All projects should be evaluated in some way so that the learning from them is recorded and communicated.

Ideally we should be evaluating our programme or project based on the following criteria:

· Relevance - the extent to which the project or programme objectives are valid and appropriate for the priorities and needs of the beneficiaries.

· Effectiveness - the extent to which the objectives are being achieved as a result of the project or programme itself, and the extent to which other factors are influencing the results. Consideration of the wider context in which projects and programmes operate should be an integral part of the evaluation.

· Impact - assessing changes that have occurred in the lives of the intended beneficiaries, and the different forces and influences that have contributed to bringing about these changes. These may be project-related or wider forces and influences. Impact on other people should also be considered. The changes occurring may be positive or negative, intended or unintended. The impact may differ for women and men, people of different ages, different ethnic groups and other social groupings, and the analysis should consider different groups separately. See also ‘how do we measure impact’ below.

· Cost-effectiveness - an assessment of how much the project or programme has been able to achieve in relation to the resources used and time taken.

· Sustainability - the extent to which the benefits achieved by the project or programme can be continued in an appropriate way after outside assistance has ceased.

· Gender sensitivity - the extent to which men and women have benefited differently in terms of greater control of their lives, resources and changes in responsibilities and gender relations.

· Environmental impact - an assessment of how the natural environment and resources have been affected (both positively and negatively) as a result of the project or programme intervention.

· Organisational support and capacities - the influence that different organisations (e.g. donor, Oxfam, counterpart, community organisations) have had on the project or programme and the organisational capacities that have been developed or will be required in the future.
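The Impact and Gender sensitivity criteria above ask that results be analysed for different groups separately. As a minimal sketch of what that can look like with survey data, the example below tallies a yes/no outcome by group using only the Python standard library. The records, the field names (`group`, `improved`) and the function name are hypothetical illustrations, not data or tools from any actual programme.

```python
from collections import defaultdict


def share_improved_by_group(records):
    """Return {group: share of respondents reporting an improvement}."""
    totals = defaultdict(int)
    improved = defaultdict(int)
    for record in records:
        totals[record["group"]] += 1
        if record["improved"]:
            improved[record["group"]] += 1
    return {group: improved[group] / totals[group] for group in totals}


# Hypothetical survey responses, for illustration only.
responses = [
    {"group": "women", "improved": True},
    {"group": "women", "improved": False},
    {"group": "women", "improved": False},
    {"group": "women", "improved": False},
    {"group": "men", "improved": True},
    {"group": "men", "improved": True},
    {"group": "men", "improved": True},
    {"group": "men", "improved": False},
]

shares = share_improved_by_group(responses)
overall = sum(r["improved"] for r in responses) / len(responses)
print(overall, shares)
```

In this made-up data, half of all respondents report an improvement, but the pooled figure hides a large gap between the two groups. That gap is what a disaggregated analysis is meant to reveal.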

Keep in mind that the monitoring and evaluation processes themselves may need to be adjusted. For example, we might find that some of the indicators we initially identified are not useful, and need to be modified to reflect changes in the project, as learning is gained from experience and as the operating context changes. Likewise the systems for analysing and presenting the information may need to be adjusted to ensure that they feed efficiently into the learning, decision-making and project reporting processes.

Evaluations should involve as many key stakeholders as possible. It is often surprising just how many different stakeholders have an interest in the project and in its evaluation. A useful way of establishing this is to do a stakeholder analysis (see tools and resources from the Design stage). The main groupings are usually:

· Intended Beneficiaries with different interests among women and men, different age groups and different social groupings. It is important that the intended beneficiaries have a recognised and dominant stake in the evaluation, since it is in their interests that the project is being undertaken

· Partners possibly with different internal interests.

· Oxfam staff and Trustees including programme, management and advisory staff.

· Donors possibly with different interests at the different levels of staff, management and governing body.

· Others such as local or national authorities, other agencies and policy-makers.

There are likely to be many potential stakeholders, all with different interests in the evaluation. It is very important that all the project stakeholders have a common understanding about why the evaluation is happening, what it is looking at, and how the information will be used.

The approach and methods used in an evaluation will be determined by the reasons for which it is being undertaken, who is taking responsibility and who needs to be involved. However, it is possible to identify guiding principles that should always be considered. In general, the overall approach and methods selected for an evaluation should:

· seek to involve women and men beneficiaries in the design and implementation of the evaluation;

· encourage learning by, and empowerment of, women and men beneficiaries and staff involved - and not appear alien or threatening;

· satisfy the stakeholders who will be using the findings;

· consider using a range of sources of information and encourage cross-checking of findings. Methods that could be used include Participatory Learning and Action (PLA) techniques, interviews, surveys, group meetings and workshops. The use of secondary data, such as official statistics and data from the monitoring system to support more qualitative data also needs to be considered;

· include time for reflection and ways of enabling stakeholders to learn and provide feedback during the process, in order to promote shared ownership of the findings and recommendations.

Ideally, a balanced strategy will be identified that considers at least some of the interests of all the main stakeholder groups. However, it will often be impossible for one evaluation to satisfy all the needs of all the stakeholders. Once the various needs have been explored, it will be important to prioritise them and decide which will, and which will not, be met by the evaluation.

To evaluate a programme, to say what value it has had for the target communities, we need to be able to produce sound evidence of exactly what impact the programme has had on their lives. This may be the impact that we planned that it would have, but there may be others, positive or negative.

The term commonly used to talk about this evidence is "impact indicators". These are signs (facts, figures, statistics, collections of personal testimonies etc) that indicate what effect, or impact the programme is having.

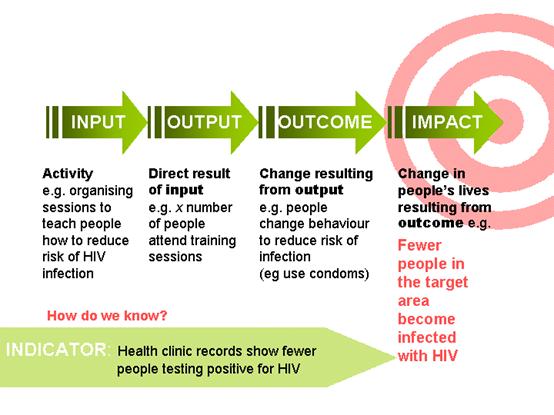

However, a common mistake is to measure only whether the planned activities took place, or their immediate outcomes. But measuring activities actually only indicates what processes we have used to try to achieve a longer term impact - these are process indicators. They don't tell us if the programme has had the desired impact.

Activities have outcomes, which may or may not lead to the desired impact. For example, an outcome of activities like training community members in how to reduce the risk of becoming infected with HIV, may be that people start buying more condoms. The sales figures for condoms in the area and people's reports in surveys and questionnaires that they are buying and using them indicate that the activities have had this outcome - these are outcome indicators. They don't tell us if the programme has had the desired impact either.

The impact is only achieved if the condoms are used correctly and consistently and this leads to a measurable reduction in HIV infections. Statistics at STI clinics, surveys and questionnaires may provide the evidence that will indicate to what extent this impact has been achieved - these are impact indicators.
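The distinction drawn above between process, outcome and impact indicators can be made explicit when setting up a monitoring plan. The sketch below is an illustration only: the class names, field names, data sources and indicator wording are hypothetical, loosely based on the HIV example in the text.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    PROCESS = "process"   # did the planned activities take place?
    OUTCOME = "outcome"   # what did the activities lead to?
    IMPACT = "impact"     # did the longer-term change occur?


@dataclass(frozen=True)
class Indicator:
    description: str
    level: Level
    source: str


# Hypothetical monitoring plan, for illustration only.
plan = [
    Indicator("Number of community training sessions held", Level.PROCESS, "activity records"),
    Indicator("Condom sales in the project area", Level.OUTCOME, "sales figures"),
    Indicator("Reported condom use", Level.OUTCOME, "surveys and questionnaires"),
    Indicator("New HIV infections recorded", Level.IMPACT, "STI clinic statistics"),
]


def missing_levels(indicators):
    """Return the indicator levels that the plan does not cover."""
    covered = {indicator.level for indicator in indicators}
    return [level for level in Level if level not in covered]


print(missing_levels(plan))       # the full plan covers every level
print(missing_levels(plan[:1]))   # a plan that only counts activities
```

A check like `missing_levels` guards against the common mistake described above, where a plan measures only whether activities took place and has no evidence of outcomes or impact.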

Here's a summary of the input to impact chain and how it works:

Web Resources

IDRC: Preparing Program Objectives

Overseas Development Institute: Evaluation Follow-up: Types Of Use http://www.odi.org.uk/alnap/modules/m2/pdfs/10_2.pdf

Utilization-Focused Evaluation: http://www.wmich.edu/evalctr/checklists/ufechecklist.htm

Weiss, Carol. Evaluating Capacity Development: Experiences from Research and Development Organizations Around the World (Ch. 7, Using & Benefiting from an Evaluation). http://www.agricta.org/pubs/isnar2/ECDbook(H-ch7).pdf